200+ LLMs. One secure, collaborative workspace.

The simplest and most powerful way for teams to use GPT, Claude, Gemini, Llama, Mistral, DeepSeek, Qwen, and more – all inside a single workspace with consistent workflows, enterprise-grade controls, and seamless model switching.

Trusted by enterprises

Why Teams Need More Than One AI Model

No single AI model is best at everything. Some excel at reasoning, others at writing, coding, or speed.

High-performing teams need the flexibility to choose the right model at the right moment — without losing context or switching tools.

Key Features

WorkLLM doesn’t just offer access to multiple models — it unifies them into a workspace designed for clarity, structure, and real-world team workflows.

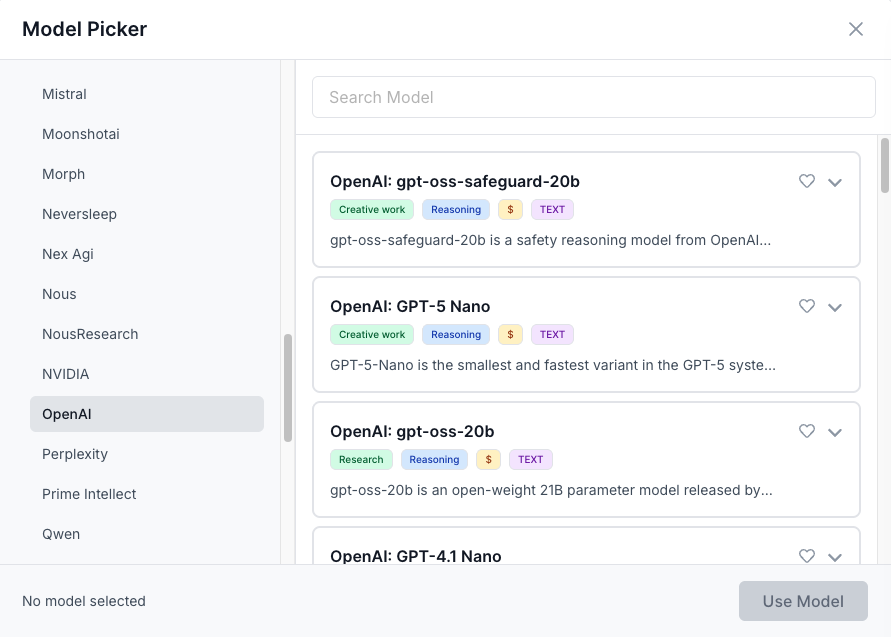

Unified Workspace for 200+ Models

GPT, Claude, Gemini, Llama, Mistral, DeepSeek, Qwen, and private enterprise models all work inside the same clean interface — no switching tools, no repeated prompts, no fragmented history.

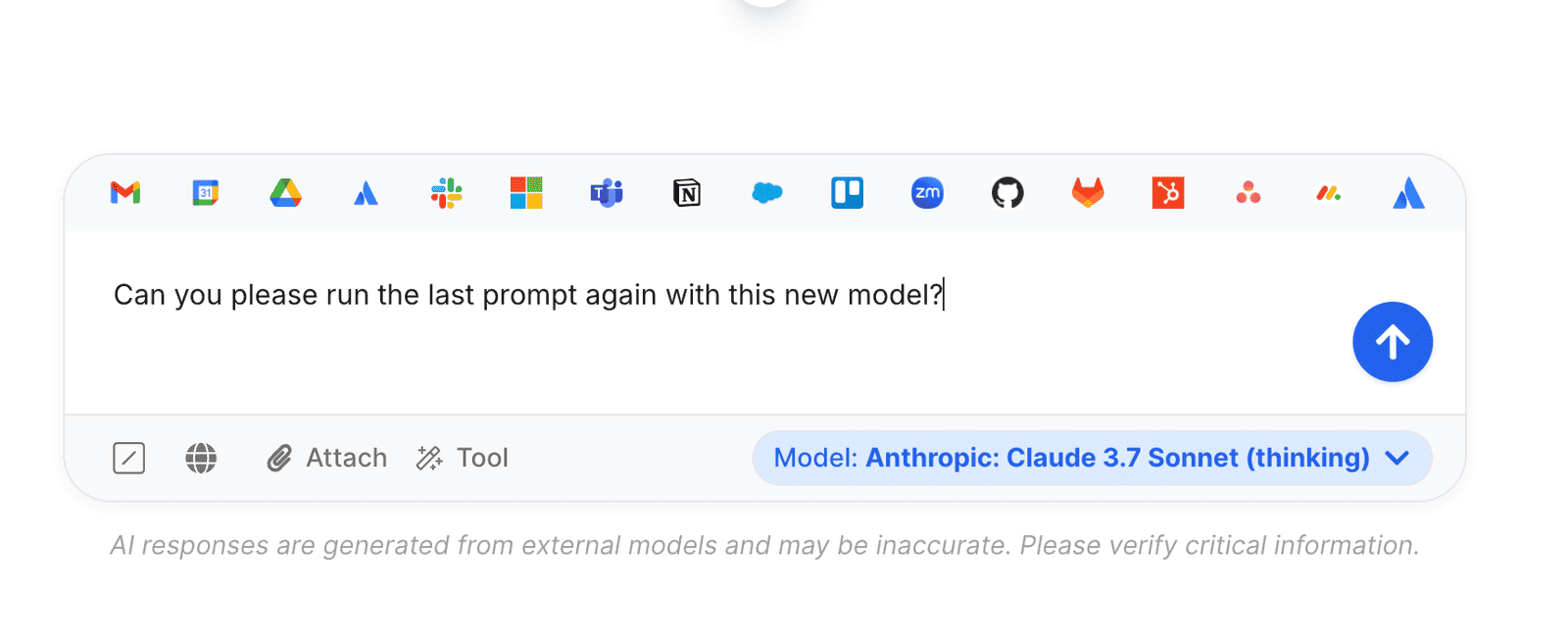

Switch Models Without Losing Context

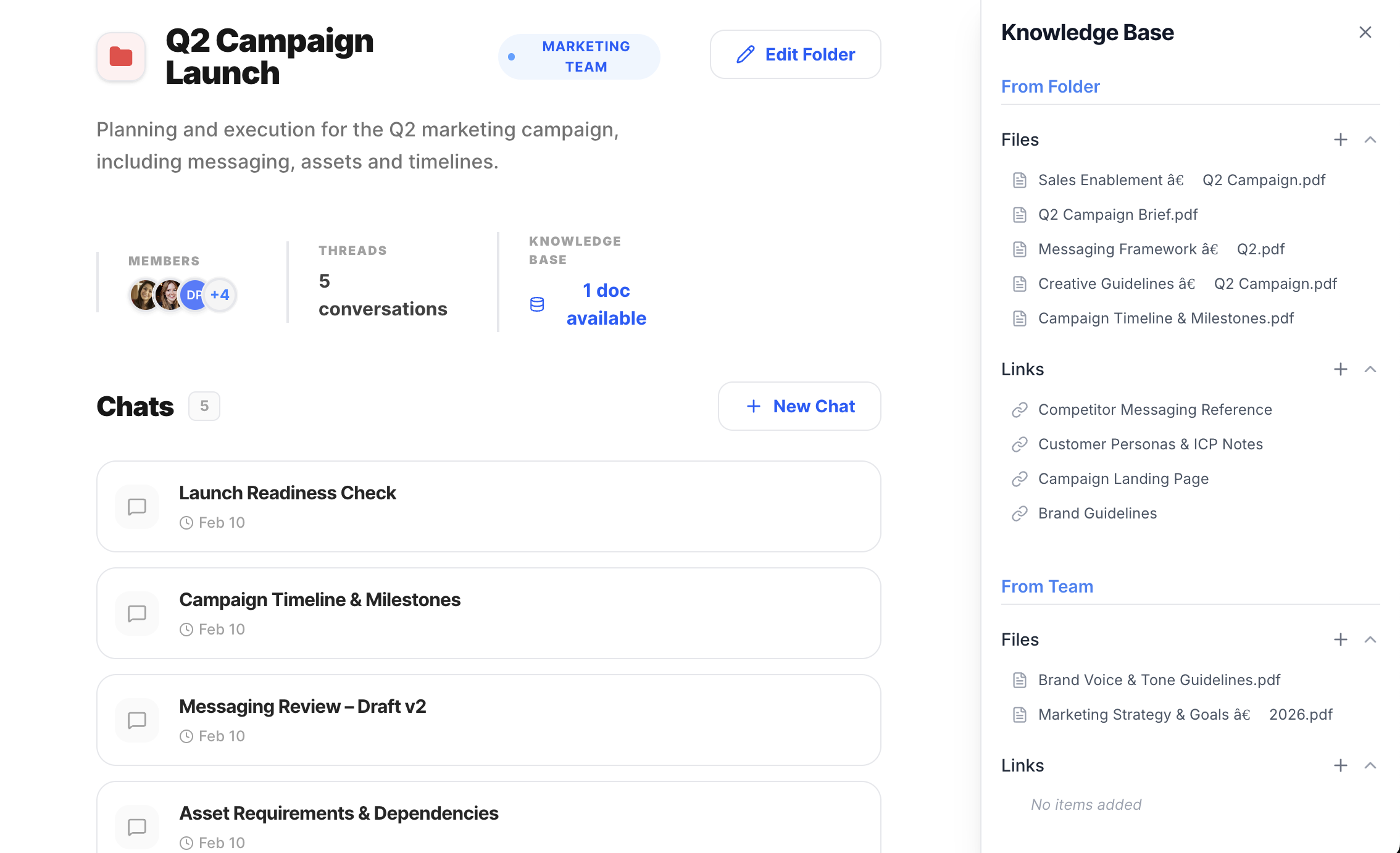

Projects That Scale Your Work

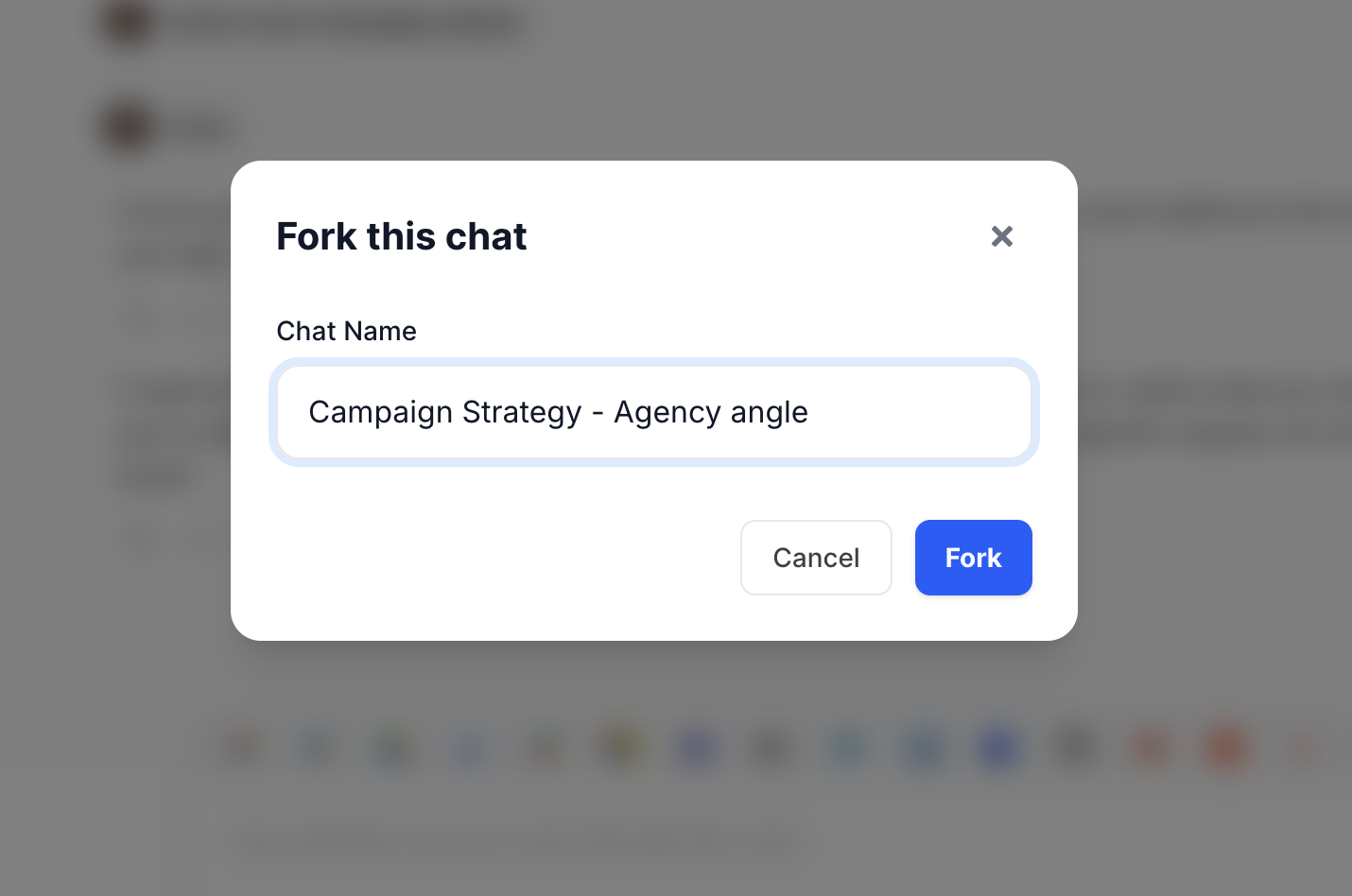

Fork Conversations to Explore Alternatives

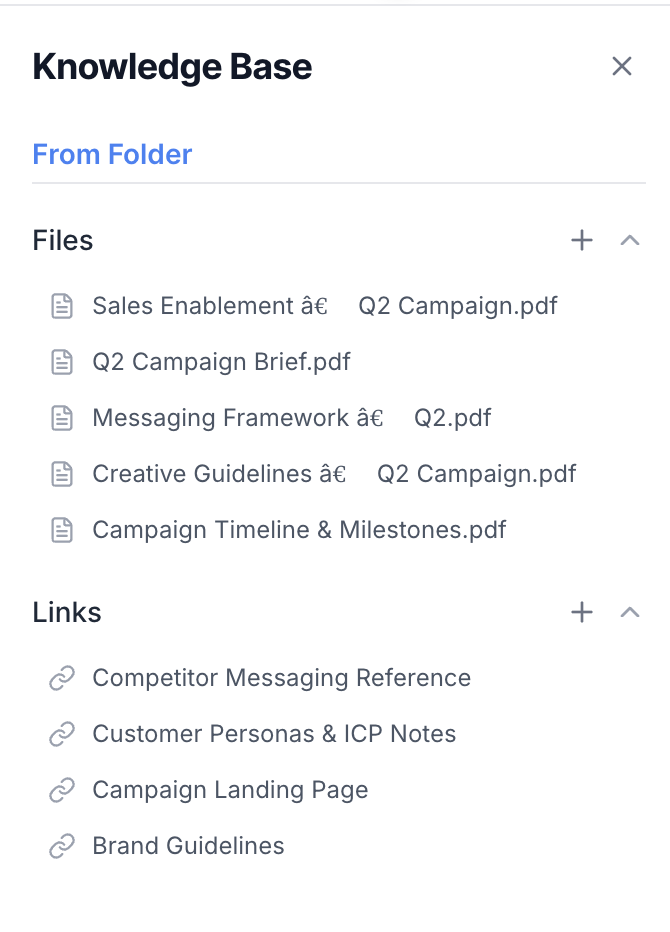

Project-Level Knowledge Sources

Search That Works Across Everything

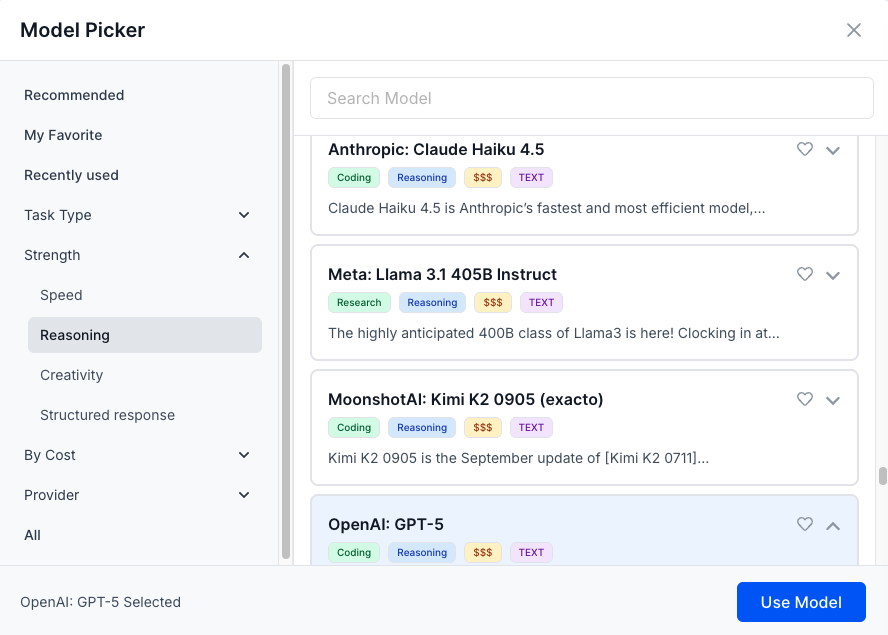

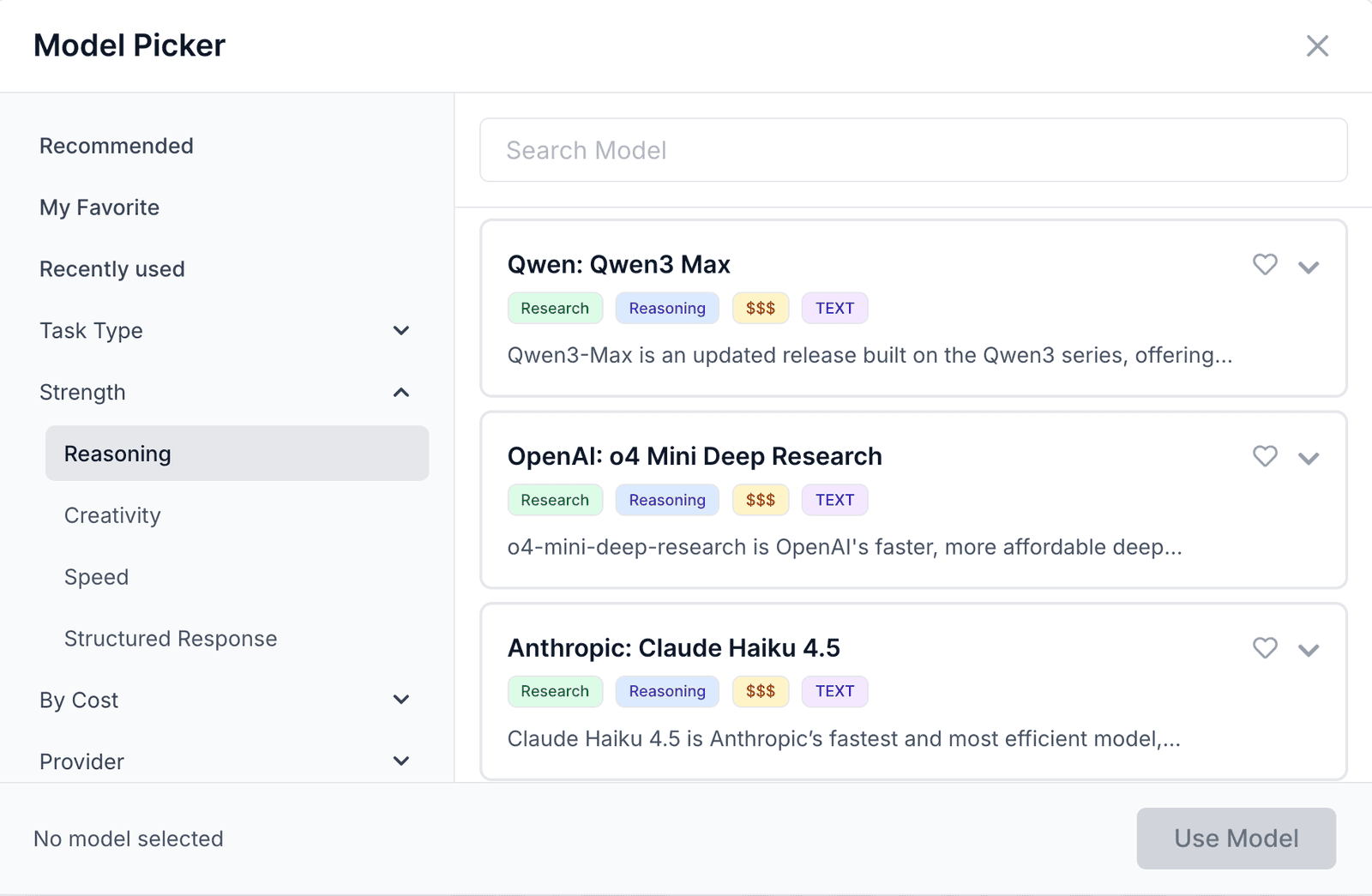

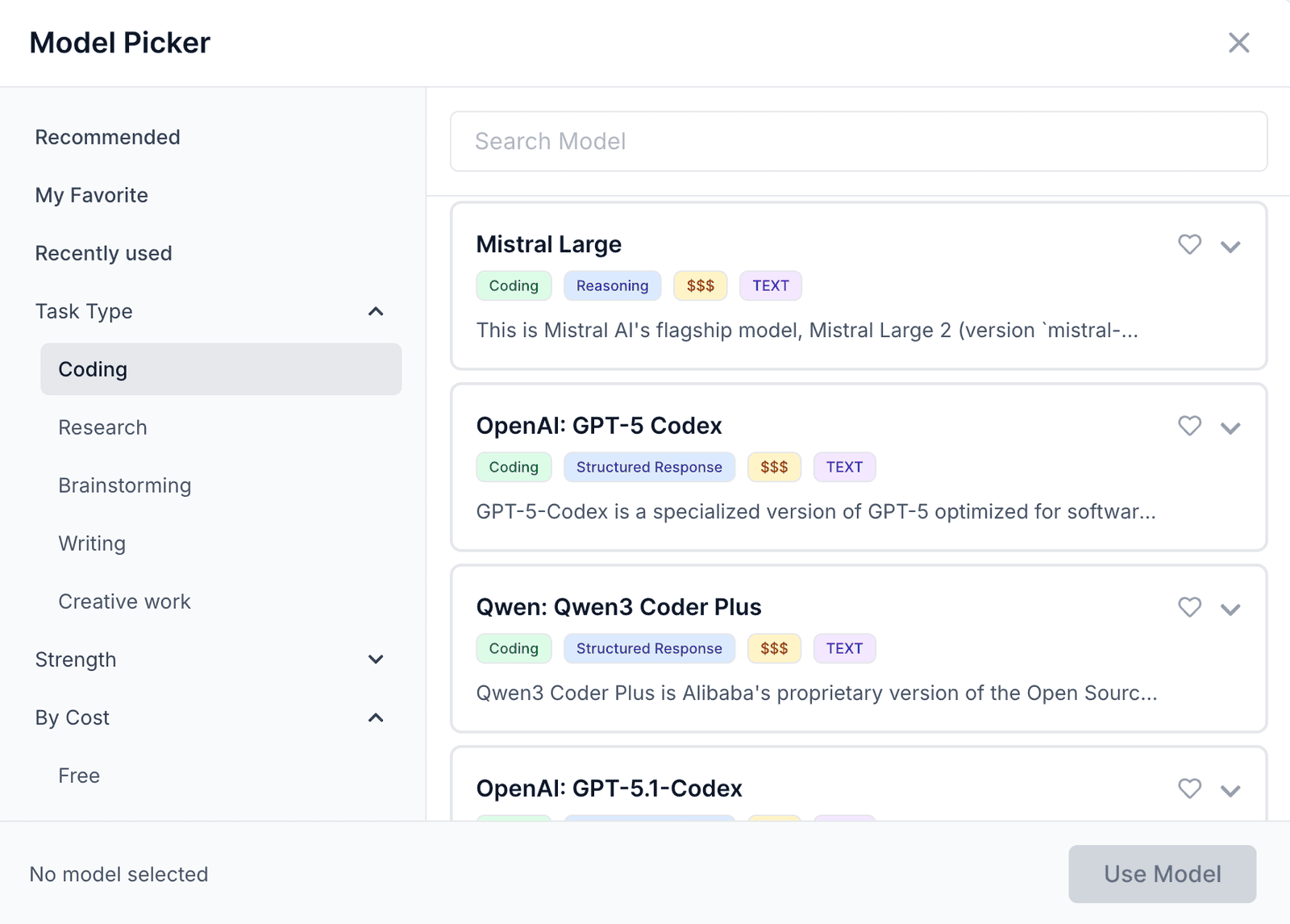

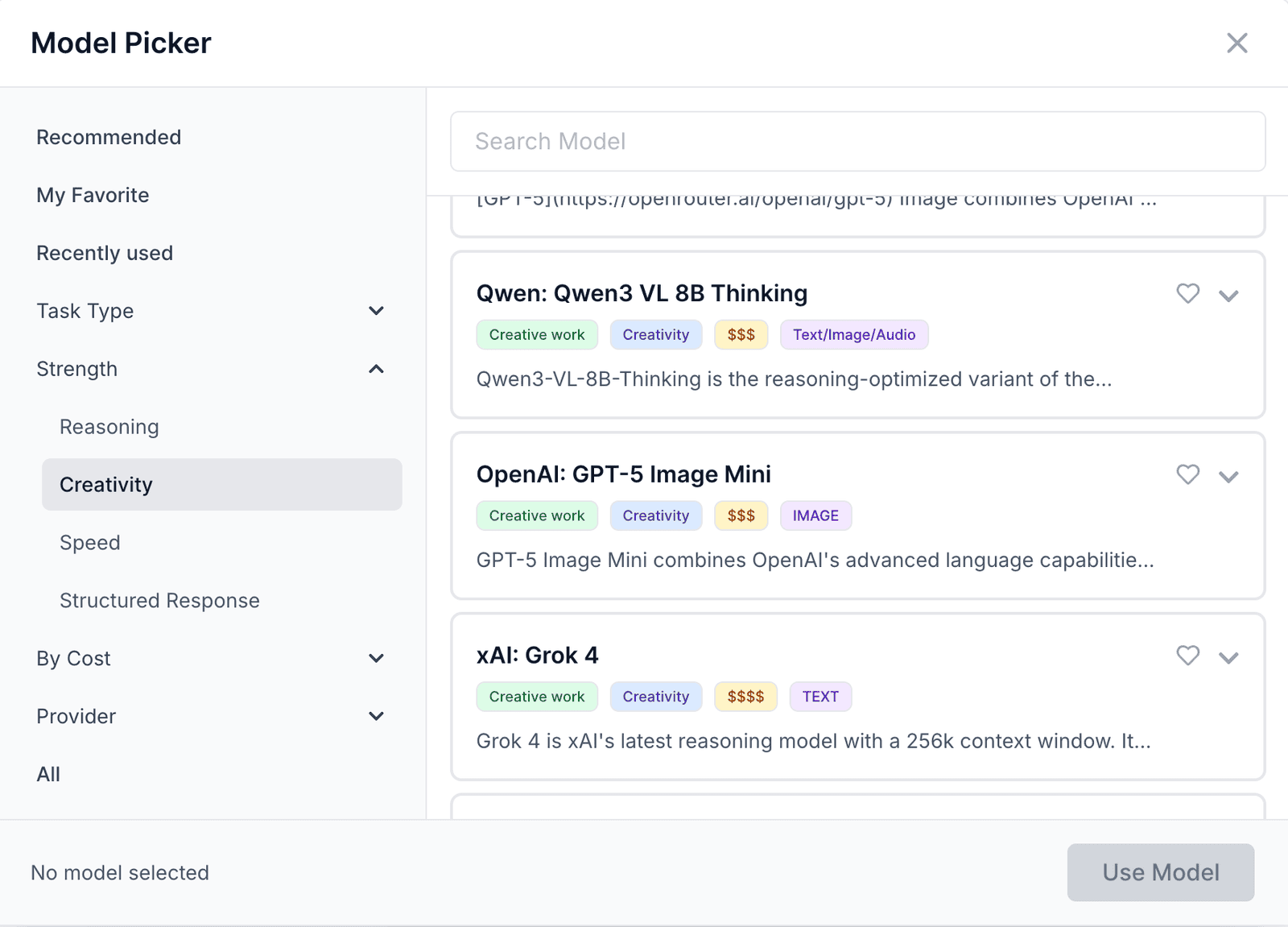

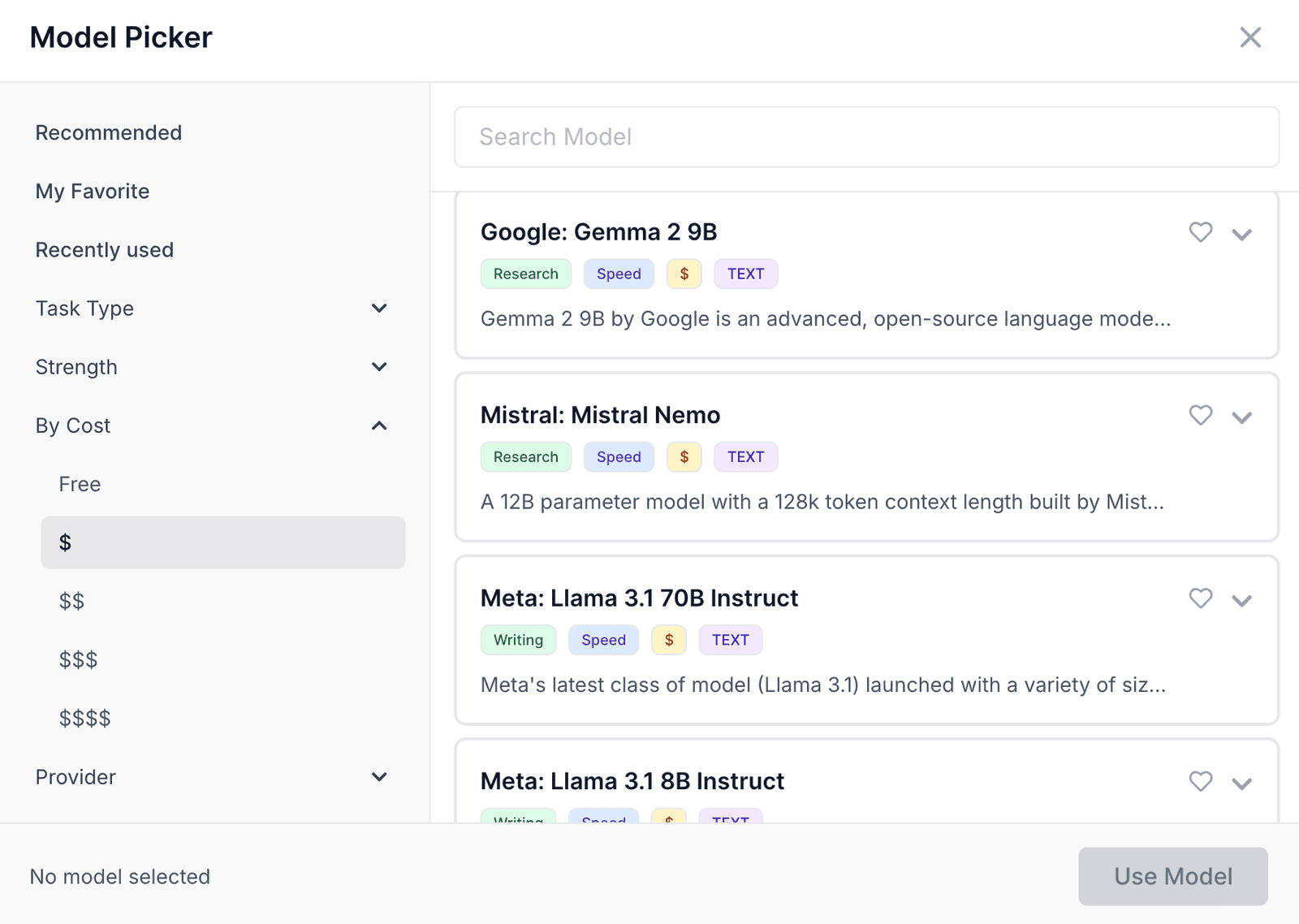

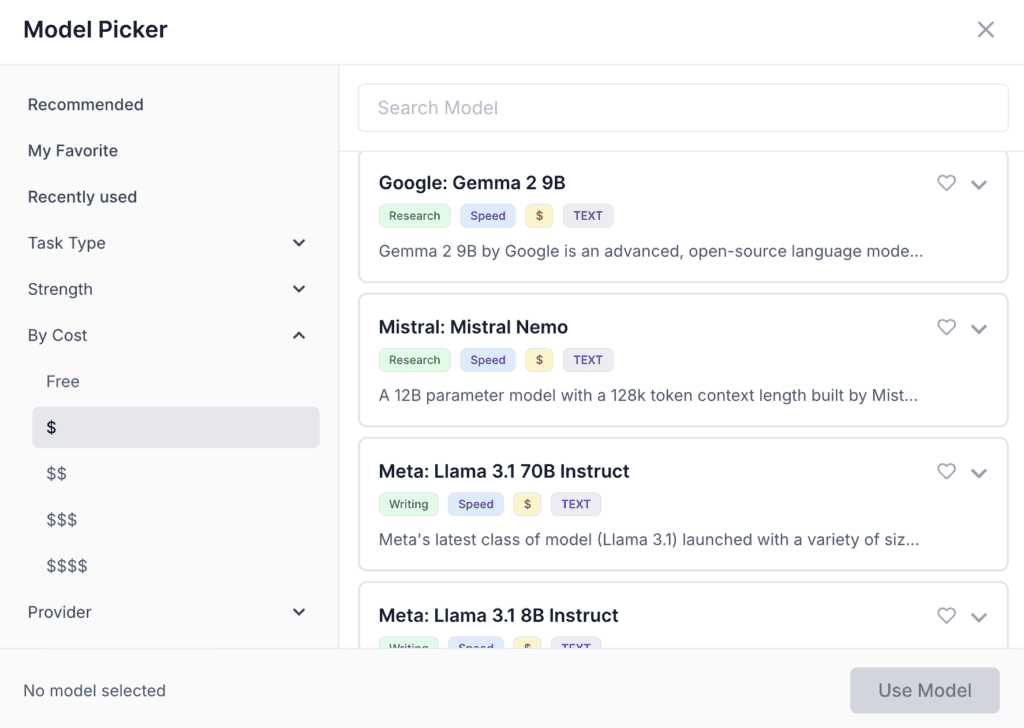

Choose the Best Model for Your Task

By Task Type

By Strength

By Cost

By Provider

The WorkLLM Advantage

Governed Multi-Model Access

Persistent, Cross-Model Context

Structured Exploration, Not Trial-And-Error

One Interface, Many Models

Save up to 90% on AI Costs

Premium reasoning models are expensive, but they’re not needed for every task.

WorkLLM helps teams use cost-effective models where they make sense, reserve premium models for critical work, and control spend with workspace-wide policies — without sacrificing output quality.
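To make the arithmetic behind mixed-model savings concrete, here is a minimal sketch. The prices, the function names, and the 10% premium share are all hypothetical values chosen for illustration. They are not WorkLLM or provider pricing, and actual savings depend on your workload.

```python
# Hypothetical prices in USD per million tokens; NOT real provider pricing.
PREMIUM_PRICE = 20.0
BUDGET_PRICE = 1.0


def monthly_cost(total_tokens: int, premium_share: float) -> float:
    """Cost when `premium_share` of all tokens go to the premium model
    and the remainder goes to the budget model."""
    if total_tokens <= 0:
        raise ValueError("total_tokens must be positive")
    if not 0.0 <= premium_share <= 1.0:
        raise ValueError("premium_share must be between 0 and 1")
    millions = total_tokens / 1_000_000
    premium = millions * premium_share * PREMIUM_PRICE
    budget = millions * (1.0 - premium_share) * BUDGET_PRICE
    return premium + budget


def savings_fraction(total_tokens: int, premium_share: float) -> float:
    """Savings relative to sending every token to the premium model."""
    baseline = monthly_cost(total_tokens, 1.0)
    return 1.0 - monthly_cost(total_tokens, premium_share) / baseline


if __name__ == "__main__":
    tokens = 100_000_000  # assumed monthly volume
    print(f"All premium: ${monthly_cost(tokens, 1.0):,.2f}")
    print(f"10% premium: ${monthly_cost(tokens, 0.1):,.2f}")
    print(f"Savings:     {savings_fraction(tokens, 0.1):.1%}")
```

Under these assumed prices, the savings grow as the price gap between tiers widens and as the share of work that truly needs a premium model shrinks.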

FAQs

What does “Multi-LLM Chat” mean in WorkLLM?

It means you can use GPT, Claude, Gemini, Llama, Mistral, DeepSeek, Qwen, and many other models all inside the same chat interface without switching platforms or losing context.

Why would I use more than one AI model?

Different models excel at different tasks. Some are better at reasoning, others at writing, coding, analysis, or creative work. Multi-LLM lets you pick the best model for the job.

Can I switch models in the middle of a conversation?

Yes. You can switch models at any time, re-run a message with another model, or compare outputs without leaving the thread.

Do I lose my chat history when switching models?

No. The entire conversation – prompts, files, and context – stays intact so every model can reuse the same information.

How does WorkLLM help me choose the best model?

You can select models based on task type, strength (reasoning, speed, creativity), cost, or provider. WorkLLM clearly labels these options to make selection simple.

Can I compare two model outputs?

Yes. You can re-run any message with another model and compare the outputs in the same thread to evaluate quality, reasoning style, or accuracy.

Does using multiple models increase cost?

Not necessarily. Many teams reduce costs by using cheaper models for simple tasks and reserving premium reasoning models only when needed — saving up to 90%.

Can admins restrict which models the team is allowed to use?

Yes. Administrators can enable or disable specific models and enforce governance rules across the workspace.

What is “forking” and why is it useful?

Forking lets you branch off a message to explore a new idea or test a different model without overwriting your original conversation.

Can multiple users collaborate inside a Multi-LLM thread?

Yes. Multi-LLM features are available in both personal and shared chats, letting teams explore and compare model outputs together.

How does WorkLLM handle model updates?

When providers release new model versions, WorkLLM adds them as selectable options without disrupting existing conversations.

How are tokens and costs tracked across different models?

WorkLLM provides model-level usage analytics so you can monitor token consumption per user, team, or model — useful for managing budgets.
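As a rough illustration of what per-user, per-team, and per-model tracking involves, the sketch below totals token counts along one dimension at a time. The `UsageEvent` record and the `tokens_by` helper are hypothetical names invented for this example. They do not describe WorkLLM's actual data model or API.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageEvent:
    """One hypothetical usage record: who used which model, and how much."""
    user: str
    team: str
    model: str
    tokens: int


def tokens_by(events, key: str) -> dict:
    """Sum tokens grouped by one of 'user', 'team', or 'model'."""
    if key not in ("user", "team", "model"):
        raise ValueError("key must be 'user', 'team', or 'model'")
    totals = defaultdict(int)
    for event in events:
        totals[getattr(event, key)] += event.tokens
    return dict(totals)


if __name__ == "__main__":
    # Illustrative records only; the names and numbers are made up.
    events = [
        UsageEvent("ana", "marketing", "model-a", 1200),
        UsageEvent("ben", "marketing", "model-b", 300),
        UsageEvent("ana", "marketing", "model-b", 500),
        UsageEvent("cho", "finance", "model-a", 2000),
    ]
    print(tokens_by(events, "model"))
    print(tokens_by(events, "team"))
```

Grouping the same records along different keys is what lets a budget owner ask both "which model costs the most?" and "which team uses the most?" from a single usage log.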
